Siri is fantastic. The main reason I decided to upgrade from the iPhone 4 to the 4S was this new technology. I could probably pass up a faster processor, or an improved camera, but when something like Siri is announced, I just have to see how it works! It’s akin to when the first iPad was released. Siri feels like a game changer and I don’t want to miss out on the action. There have been a lot of reviews about how Siri works, so I won’t go into that too much, but I would like to discuss the future impact that Siri could have on both our lives and the tech industry.

Many iPhone users are frustrated that Apple restricted Siri to the iPhone 4S instead of including it as part of iOS 5 and allowing iPhone 4 users access. There’s been a lot of speculation around why Apple decided to do this. Some people suggest that the dual-core performance of the 4S is needed to support Siri. Others say that Apple restricted Siri to the 4S in order to sell more hardware and push curious users (like myself) to upgrade. Another theory is that Apple used the 4S as a way to limit the number of Siri users during this “beta” period. I absolutely agree with this last theory. Apple announced that they sold over 4 million iPhone 4S units during the first weekend of sales. That’s a lot. However, when you compare this to the total number of iOS devices sold thus far (250 million [1]), it’s a small fraction. Let’s conservatively assume that 20 million of these users are on iOS 5 after the first week or two. If all of them had Siri, that would be 5x as many users as the 4 million 4S owners, and roughly 5x the load on the already struggling Siri servers and network. By limiting Siri to the 4S, Apple can slowly increase load as they sell more devices. If they feel confident that they can scale, they may open up Siri to everyone in a future software update.
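To put rough numbers on that, here is a quick back-of-the-envelope sketch in Python. The 4 million and 250 million figures are the ones quoted above; the 20 million iOS 5 users is purely my own guess, and the variable names are just for illustration. It also assumes load scales linearly with the number of users, which is a simplification.

```python
# Figures quoted in the post. The iOS 5 user count is the author's guess,
# not a published number.
iphone_4s_sold = 4_000_000
ios_devices_sold = 250_000_000
assumed_ios5_users = 20_000_000

# Fraction of all iOS devices that are 4S units (and so have Siri today).
share_4s = iphone_4s_sold / ios_devices_sold

# How many times more Siri users there would be if every assumed iOS 5
# user had access, relative to 4S owners alone.
load_multiplier = assumed_ios5_users / iphone_4s_sold

print(f"4S share of all iOS devices: {share_4s:.1%}")
print(f"Relative load with all iOS 5 users: {load_multiplier:.0f}x")
```

So 4S owners are under 2% of all iOS devices sold, which is why gating Siri on the new hardware works so well as a throttle.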

Siri works by streaming your voice to Apple’s servers as you talk and then, once you’re finished, converting this speech to text and performing the natural language processing to understand what you mean. It sounds complicated, but it works extremely fast as long as Siri’s servers are humming along. (The first few days after the 4S was released, Siri was seeing considerable load/traffic and would often respond that she was unable to connect to the network. I have not seen this error in the past few days, so the situation seems to be improving.) One drawback of always sending everything out to Apple’s servers is that you cannot use Siri when you are offline. So, if you’re traveling in another country without data roaming, you can’t use Siri. Practically speaking, this isn’t a huge issue… but it would be nice if a subset of the commands worked locally on the phone.

More importantly, streaming everything out to Siri’s datacenter provides some massive benefits. Apple is able to keep a huge database of every request ever sent to Siri. Over time they will be able to extract a massive amount of information about “the world’s questions”. In addition to gathering all of this data, Apple is able to update Siri’s brain without requiring iPhone users to do a software update. For all we know, Siri could have been updated 36 times since Friday’s launch. Apple could use all of this request data to gradually add functionality to Siri. For example, they could sort all of the requests that Siri was unable to answer by how often they come up and begin to support the most common ones. If 100,000 people asked for sports scores this weekend, maybe that should be the next area to focus on? Siri gives Apple incredible insight into the tasks and questions that millions of users around the world are trying to get done. This is huge! If this sounds familiar, it’s because Google has been doing this with their big search box for years.
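As a toy illustration of that idea, ranking unanswered requests by frequency could look something like the sketch below. Everything here is hypothetical: I have no idea how Apple actually stores or analyzes Siri requests, and the log format, topic labels, and `rank_unanswered` function are made up for the example.

```python
from collections import Counter

# Hypothetical log entries: (request topic, whether Siri could answer it).
request_log = [
    ("sports scores", False),
    ("weather", True),
    ("sports scores", False),
    ("movie times", False),
    ("set alarm", True),
    ("sports scores", False),
    ("movie times", False),
]


def rank_unanswered(log):
    """Count unanswered requests per topic, most frequent first."""
    counts = Counter(topic for topic, answered in log if not answered)
    return counts.most_common()


ranking = rank_unanswered(request_log)
for topic, count in ranking:
    print(f"{topic}: {count} unanswered requests")
```

In this made-up sample, sports scores come out on top, so that would be the first gap to close.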

There are some major parallels between Google search and the functionality enabled by Siri. The difference is that Siri has access to your email, location, text messages, calendars, contacts, and other information. And, just like with Google, privacy and trust issues will certainly come up with Siri. She knows a lot about us, and it’s important that we can trust Apple with this information. Personally, I am much more inclined to trust Apple than I am Google. Google makes their money by selling advertisements. This means gathering a lot of information about the user so they can display better ads and make more money. In contrast, Apple is in the business of selling devices. The software and services are just icing on the cake to help lock in customers and drive hardware sales. Apple has not shown a lot of interest in pushing ads or violating the user’s privacy. I feel pretty confident that Apple will keep my personal data private.

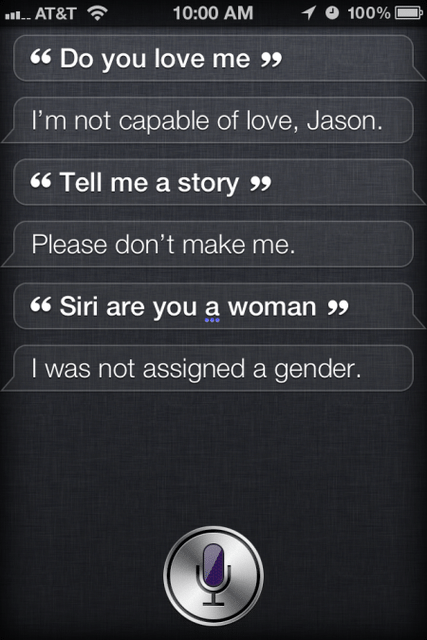

Right now Siri only knows about a limited amount of information. Siri is able to access the data on your phone as well as information from Yelp and Wolfram Alpha. If Siri does not know the answer to your question, she offers to Search the Web (Google) for you. You don’t need to spend too much time using Siri before you get a decent grasp of her knowledge. I don’t ask Siri about sports scores or current events. I don’t ask her to tweet for me (although, it is possible). Over time you could imagine that many more services could be added to Siri which would provide a lot of value to users. Additionally, Siri apparently learns more about us as time goes on and should be able to improve her results and understanding. I’m thrilled to be living in a time where this kind of technology has become a reality. I think Siri’s future is bright.

[1] 250 million. Source: This is My Next
